Module contents

The Autotuning Methodology package is split into various modules based on functionality.

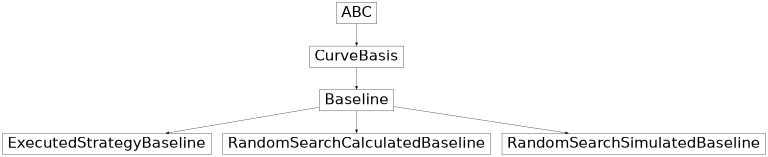

Baseline module

Code for baselines.

- class Baseline[source]

Bases:

CurveBasisClass to use as a baseline against Curves in plots.

- abstractmethod get_standardised_curve(range: ndarray, strategy_curve: ndarray, x_type: str) ndarray[source]

Substract the baseline curve from the provided strategy curve, yielding a standardised strategy curve.

- abstractmethod get_standardised_curves(range: ndarray, strategy_curves: list[ndarray], x_type: str) tuple[source]

Substract the baseline curve from the provided strategy curves, yielding standardised strategy curves.

- label: str

- searchspace_stats: SearchspaceStatistics

- class ExecutedStrategyBaseline(searchspace_stats: SearchspaceStatistics, strategy: StochasticOptimizationAlgorithm, confidence_level: float)[source]

Bases:

BaselineBaseline object using an executed strategy.

- get_curve(range: ndarray, x_type: str, dist=None, confidence_level=None) ndarray[source]

Get the curve over the specified range of time or function evaluations.

- Parameters:

range – the range of time or function evaluations.

x_type – the type of the x-axis range (either time or function evaluations).

dist – the distribution, used for looking up indices. Ignored in

Baseline. Defaults to None.confidence_level – confidence level for the confidence interval. Ignored in

Baseline. Defaults to None.return_split – whether to return the arrays split at the real / fictional point. Defaults to True.

- Raises:

ValueError – on invalid

x_typeargument.- Returns:

A tuple of NDArrays with NaN beyond limits. See

get_curve_over_fevals()andget_curve_over_time()for more precise return values.

- get_curve_over_fevals(fevals_range: ndarray, dist=None, confidence_level=None, return_split=False) ndarray[source]

Get the curve over function evaluations.

- Parameters:

fevals_range – the range of function evaluations.

dist – the distribution, used for looking up indices. Ignored in

Baseline. Defaults to None.confidence_level – confidence level for the confidence interval. Ignored in

Baseline. Defaults to None.return_split – whether to return the arrays split at the real / fictional point. Defaults to True.

- Returns:

NumPy array of the baseline trajectory over the specified

fevals_range.The real_stopping_point_index and the real, fictional curve, errors over the specified

fevals_range.

- Return type:

Two possible returns, for

BaselineandCurverespectively

- get_curve_over_time(time_range: ndarray, dist=None, confidence_level=None, return_split=False) ndarray[source]

Get the curve over time.

- Parameters:

time_range – the range of time.

dist – the distribution, used for looking up indices. Ignored in

Baseline. Defaults to None.confidence_level – confidence level for the confidence interval. Ignored in

Baseline. Defaults to None.return_split – whether to return the arrays split at the real / fictional point. Defaults to True.

- Returns:

NumPy array of the baseline trajectory over the specified

time_range.The real_stopping_point_index and the real, fictional curve, errors over the specified

time_range.

- Return type:

Two possible returns, for

BaselineandCurverespectively

- get_split_times(range: ndarray, x_type: str, searchspace_stats: SearchspaceStatistics) ndarray[source]

Get the times at each point in range split into objective_time_keys.

- Parameters:

range – the range of time or function evaluations.

x_type – the type of range (either time or function evaluations).

searchspace_stats – Searchspace statistics object.

- Raises:

ValueError – on wrong

x_type.- Returns:

A NumPy array of size (len(objective_time_keys), len(range)).

- get_split_times_at_feval(fevals_range: ndarray, searchspace_stats: SearchspaceStatistics) ndarray[source]

Get the times at each function eval in the range split into objective_time_keys.

- Parameters:

fevals_range – the range of function evaluations.

searchspace_stats – Searchspace statistics object.

- Returns:

A NumPy array of size (len(objective_time_keys), len(range)).

- get_split_times_at_time(time_range: ndarray, searchspace_stats: SearchspaceStatistics) ndarray[source]

Get the times at each time point in the range split into objective_time_keys.

- Parameters:

time_range – the range of time.

searchspace_stats – Searchspace statistics object.

- Returns:

A NumPy array of size (len(objective_time_keys), len(range)).

- get_standardised_curve(range: ndarray, strategy_curve: ndarray, x_type: str) ndarray[source]

Substract the baseline curve from the provided strategy curve, yielding a standardised strategy curve.

- class RandomSearchCalculatedBaseline(searchspace_stats: SearchspaceStatistics, include_nan=False, time_per_feval_operator: str = 'mean_per_feval', simulate: bool = False)[source]

Bases:

BaselineBaseline object using calculated random search without replacement.

- get_curve(range: ndarray, x_type: str, dist=None, confidence_level=None) ndarray[source]

Get the curve over the specified range of time or function evaluations.

- Parameters:

range – the range of time or function evaluations.

x_type – the type of the x-axis range (either time or function evaluations).

dist – the distribution, used for looking up indices. Ignored in

Baseline. Defaults to None.confidence_level – confidence level for the confidence interval. Ignored in

Baseline. Defaults to None.return_split – whether to return the arrays split at the real / fictional point. Defaults to True.

- Raises:

ValueError – on invalid

x_typeargument.- Returns:

A tuple of NDArrays with NaN beyond limits. See

get_curve_over_fevals()andget_curve_over_time()for more precise return values.

- get_curve_over_fevals(fevals_range: ndarray, dist=None, confidence_level=None, return_split=False) ndarray[source]

Get the curve over function evaluations.

- Parameters:

fevals_range – the range of function evaluations.

dist – the distribution, used for looking up indices. Ignored in

Baseline. Defaults to None.confidence_level – confidence level for the confidence interval. Ignored in

Baseline. Defaults to None.return_split – whether to return the arrays split at the real / fictional point. Defaults to True.

- Returns:

NumPy array of the baseline trajectory over the specified

fevals_range.The real_stopping_point_index and the real, fictional curve, errors over the specified

fevals_range.

- Return type:

Two possible returns, for

BaselineandCurverespectively

- get_curve_over_time(time_range: ndarray, dist=None, confidence_level=None, return_split=False) ndarray[source]

Get the curve over time.

- Parameters:

time_range – the range of time.

dist – the distribution, used for looking up indices. Ignored in

Baseline. Defaults to None.confidence_level – confidence level for the confidence interval. Ignored in

Baseline. Defaults to None.return_split – whether to return the arrays split at the real / fictional point. Defaults to True.

- Returns:

NumPy array of the baseline trajectory over the specified

time_range.The real_stopping_point_index and the real, fictional curve, errors over the specified

time_range.

- Return type:

Two possible returns, for

BaselineandCurverespectively

- get_split_times(range: ndarray, x_type: str, searchspace_stats: SearchspaceStatistics) ndarray[source]

Get the times at each point in range split into objective_time_keys.

- Parameters:

range – the range of time or function evaluations.

x_type – the type of range (either time or function evaluations).

searchspace_stats – Searchspace statistics object.

- Raises:

ValueError – on wrong

x_type.- Returns:

A NumPy array of size (len(objective_time_keys), len(range)).

- get_split_times_at_feval(fevals_range: ndarray, searchspace_stats: SearchspaceStatistics) ndarray[source]

Get the times at each function eval in the range split into objective_time_keys.

- Parameters:

fevals_range – the range of function evaluations.

searchspace_stats – Searchspace statistics object.

- Returns:

A NumPy array of size (len(objective_time_keys), len(range)).

- get_split_times_at_time(time_range: ndarray, searchspace_stats: SearchspaceStatistics) ndarray[source]

Get the times at each time point in the range split into objective_time_keys.

- Parameters:

time_range – the range of time.

searchspace_stats – Searchspace statistics object.

- Returns:

A NumPy array of size (len(objective_time_keys), len(range)).

- get_standardised_curve(range: ndarray, strategy_curve: ndarray, x_type: str) ndarray[source]

Substract the baseline curve from the provided strategy curve, yielding a standardised strategy curve.

- class RandomSearchSimulatedBaseline(searchspace_stats: SearchspaceStatistics, repeats: int = 500, limit_fevals: int = None, index=True, flatten=True, index_avg='mean', performance_avg='mean')[source]

Bases:

BaselineBaseline object using simulated random search.

- get_curve(range: ndarray, x_type: str, dist=None, confidence_level=None) ndarray[source]

Get the curve over the specified range of time or function evaluations.

- Parameters:

range – the range of time or function evaluations.

x_type – the type of the x-axis range (either time or function evaluations).

dist – the distribution, used for looking up indices. Ignored in

Baseline. Defaults to None.confidence_level – confidence level for the confidence interval. Ignored in

Baseline. Defaults to None.return_split – whether to return the arrays split at the real / fictional point. Defaults to True.

- Raises:

ValueError – on invalid

x_typeargument.- Returns:

A tuple of NDArrays with NaN beyond limits. See

get_curve_over_fevals()andget_curve_over_time()for more precise return values.

- get_curve_over_fevals(fevals_range: ndarray, dist=None, confidence_level=None, return_split=False) ndarray[source]

Get the curve over function evaluations.

- Parameters:

fevals_range – the range of function evaluations.

dist – the distribution, used for looking up indices. Ignored in

Baseline. Defaults to None.confidence_level – confidence level for the confidence interval. Ignored in

Baseline. Defaults to None.return_split – whether to return the arrays split at the real / fictional point. Defaults to True.

- Returns:

NumPy array of the baseline trajectory over the specified

fevals_range.The real_stopping_point_index and the real, fictional curve, errors over the specified

fevals_range.

- Return type:

Two possible returns, for

BaselineandCurverespectively

- get_curve_over_time(time_range: ndarray, dist=None, confidence_level=None, return_split=False) ndarray[source]

Get the curve over time.

- Parameters:

time_range – the range of time.

dist – the distribution, used for looking up indices. Ignored in

Baseline. Defaults to None.confidence_level – confidence level for the confidence interval. Ignored in

Baseline. Defaults to None.return_split – whether to return the arrays split at the real / fictional point. Defaults to True.

- Returns:

NumPy array of the baseline trajectory over the specified

time_range.The real_stopping_point_index and the real, fictional curve, errors over the specified

time_range.

- Return type:

Two possible returns, for

BaselineandCurverespectively

- get_split_times(range: ndarray, x_type: str, searchspace_stats: SearchspaceStatistics) ndarray[source]

Get the times at each point in range split into objective_time_keys.

- Parameters:

range – the range of time or function evaluations.

x_type – the type of range (either time or function evaluations).

searchspace_stats – Searchspace statistics object.

- Raises:

ValueError – on wrong

x_type.- Returns:

A NumPy array of size (len(objective_time_keys), len(range)).

- get_split_times_at_feval(fevals_range: ndarray, searchspace_stats: SearchspaceStatistics) ndarray[source]

Get the times at each function eval in the range split into objective_time_keys.

- Parameters:

fevals_range – the range of function evaluations.

searchspace_stats – Searchspace statistics object.

- Returns:

A NumPy array of size (len(objective_time_keys), len(range)).

- get_split_times_at_time(time_range: ndarray, searchspace_stats: SearchspaceStatistics) ndarray[source]

Get the times at each time point in the range split into objective_time_keys.

- Parameters:

time_range – the range of time.

searchspace_stats – Searchspace statistics object.

- Returns:

A NumPy array of size (len(objective_time_keys), len(range)).

Caching module

Code regarding storage and retrieval of caches.

- class Results(numpy_arrays: list[ndarray])[source]

Bases:

objectObject containing the results for an optimization algorithm on a search space.

- class ResultsDescription(run_folder: Path, application_name: str, device_name: str, group_name: str, group_display_name: str, stochastic: bool, objective_time_keys: list[str], objective_performance_keys: list[str], minimization: bool)[source]

Bases:

objectObject to store a description of results and retrieve results for an optimization algorithm on a search space.

- is_same_as(other: ResultsDescription) bool[source]

Check for equality against another ResultsDescription object.

- Parameters:

other – the other ResultsDescription object.

- Raises:

NotImplementedError – when comparing against a not implemented type.

ValueError – when comparing against an unkown or incompatible version.

KeyError – when there is a difference in the keys.

- Returns:

whether this instance is equal to the provided

otherinstance.

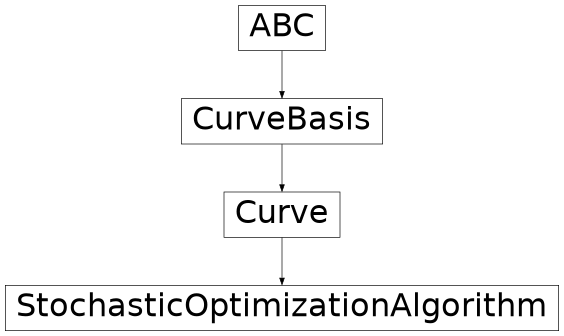

Curves module

Code for curve generation.

- class Curve(results_description: ResultsDescription)[source]

Bases:

CurveBasisThe Curve object can produce NumPy arrays directly suitable for plotting from a ResultsDescription.

- fevals_find_pad_width(array: ndarray, target_array: ndarray) tuple[int, int][source]

Finds the amount of padding required on both sides of

arrayto matchtarget_array.- Parameters:

array – input array to be padded.

target_array – target array shape.

- Raises:

ValueError – when arrays are not one-dimensional or there is a mismatch in the number of values.

- Returns:

The padded

arraywith the same shape astarget_array.

- get_isotonic_curve(x: ndarray, y: ndarray, x_new: ndarray, ymin=None, ymax=None) ndarray[source]

Get the isotonic regression curve fitted to x_new using package ‘sklearn’ or ‘isotonic’.

- Parameters:

x – x-dimension training data.

y – y-dimension training data.

x_new – x-dimension prediction data.

ymin – lower bound on the lowest predicted value. Defaults to None.

ymax – upper bound on the highest predicted value. Defaults to None.

- Returns:

The predicted monotonic piecewise curve for

x_new.

- get_isotonic_regressor(y_min: float, y_max: float, out_of_bounds: str = 'clip') IsotonicRegression[source]

Wrapper function to get the isotonic regressor.

- Parameters:

y_min – lower bound on the lowest predicted value. Defaults to -inf.

y_max – upper bound on the highest predicted value. Defaults to +inf.

out_of_bounds – handles how values outside the training domain are handled in prediction. Defaults to “clip”.

- Returns:

_description_

- get_scatter_data(x_type: str) tuple[ndarray, ndarray][source]

Get the raw data required for a scatter plot.

- Parameters:

x_type – the type of the x-axis range (either time or function evaluations).

- Raises:

ValueError – on invalid

x_typeargument.- Returns:

A tuple of two NumPy arrays for the range and data respectively.

- class CurveBasis[source]

Bases:

ABCAbstract object providing minimals for visualization and analysis. Implemented by

CurveandBaseline.- abstractmethod get_curve(range: ndarray, x_type: str, dist: ndarray = None, confidence_level: float = None, return_split: bool = True)[source]

Get the curve over the specified range of time or function evaluations.

- Parameters:

range – the range of time or function evaluations.

x_type – the type of the x-axis range (either time or function evaluations).

dist – the distribution, used for looking up indices. Ignored in

Baseline. Defaults to None.confidence_level – confidence level for the confidence interval. Ignored in

Baseline. Defaults to None.return_split – whether to return the arrays split at the real / fictional point. Defaults to True.

- Raises:

ValueError – on invalid

x_typeargument.- Returns:

A tuple of NDArrays with NaN beyond limits. See

get_curve_over_fevals()andget_curve_over_time()for more precise return values.

- abstractmethod get_curve_over_fevals(fevals_range: ndarray, dist: ndarray = None, confidence_level: float = None, return_split: bool = True)[source]

Get the curve over function evaluations.

- Parameters:

fevals_range – the range of function evaluations.

dist – the distribution, used for looking up indices. Ignored in

Baseline. Defaults to None.confidence_level – confidence level for the confidence interval. Ignored in

Baseline. Defaults to None.return_split – whether to return the arrays split at the real / fictional point. Defaults to True.

- Returns:

NumPy array of the baseline trajectory over the specified

fevals_range.The real_stopping_point_index and the real, fictional curve, errors over the specified

fevals_range.

- Return type:

Two possible returns, for

BaselineandCurverespectively

- abstractmethod get_curve_over_time(time_range: ndarray, dist: ndarray = None, confidence_level: float = None, return_split: bool = True)[source]

Get the curve over time.

- Parameters:

time_range – the range of time.

dist – the distribution, used for looking up indices. Ignored in

Baseline. Defaults to None.confidence_level – confidence level for the confidence interval. Ignored in

Baseline. Defaults to None.return_split – whether to return the arrays split at the real / fictional point. Defaults to True.

- Returns:

NumPy array of the baseline trajectory over the specified

time_range.The real_stopping_point_index and the real, fictional curve, errors over the specified

time_range.

- Return type:

Two possible returns, for

BaselineandCurverespectively

- abstractmethod get_split_times(range: ndarray, x_type: str, searchspace_stats: SearchspaceStatistics) ndarray[source]

Get the times at each point in range split into objective_time_keys.

- Parameters:

range – the range of time or function evaluations.

x_type – the type of range (either time or function evaluations).

searchspace_stats – Searchspace statistics object.

- Raises:

ValueError – on wrong

x_type.- Returns:

A NumPy array of size (len(objective_time_keys), len(range)).

- abstractmethod get_split_times_at_feval(fevals_range: ndarray, searchspace_stats: SearchspaceStatistics) ndarray[source]

Get the times at each function eval in the range split into objective_time_keys.

- Parameters:

fevals_range – the range of function evaluations.

searchspace_stats – Searchspace statistics object.

- Returns:

A NumPy array of size (len(objective_time_keys), len(range)).

- abstractmethod get_split_times_at_time(time_range: ndarray, searchspace_stats: SearchspaceStatistics) ndarray[source]

Get the times at each time point in the range split into objective_time_keys.

- Parameters:

time_range – the range of time.

searchspace_stats – Searchspace statistics object.

- Returns:

A NumPy array of size (len(objective_time_keys), len(range)).

- class StochasticOptimizationAlgorithm(results_description: ResultsDescription)[source]

Bases:

CurveClass for producing a curve for stochastic optimization algorithms.

- get_confidence_interval(values: ndarray, confidence_level: float) tuple[ndarray, ndarray][source]

Calculates the non-parametric confidence interval at each function evaluation across repeats.

Observations are assumed to be IID.

- Parameters:

values – the two-dimensional values to calculate the confidence interval on.

confidence_level – the confidence level for the confidence interval.

- Raises:

NotImplementedError – when weights is passed.

- Returns:

A tuple of two NumPy arrays with lower and upper error, respectively.

- get_curve(range: ndarray, x_type: str, dist: ndarray = None, confidence_level: float = None, return_split: bool = True)[source]

Get the curve over the specified range of time or function evaluations.

- Parameters:

range – the range of time or function evaluations.

x_type – the type of the x-axis range (either time or function evaluations).

dist – the distribution, used for looking up indices. Ignored in

Baseline. Defaults to None.confidence_level – confidence level for the confidence interval. Ignored in

Baseline. Defaults to None.return_split – whether to return the arrays split at the real / fictional point. Defaults to True.

- Raises:

ValueError – on invalid

x_typeargument.- Returns:

A tuple of NDArrays with NaN beyond limits. See

get_curve_over_fevals()andget_curve_over_time()for more precise return values.

- get_curve_over_fevals(fevals_range: ndarray, dist: ndarray = None, confidence_level: float = None, return_split: bool = True)[source]

Get the curve over function evaluations.

- Parameters:

fevals_range – the range of function evaluations.

dist – the distribution, used for looking up indices. Ignored in

Baseline. Defaults to None.confidence_level – confidence level for the confidence interval. Ignored in

Baseline. Defaults to None.return_split – whether to return the arrays split at the real / fictional point. Defaults to True.

- Returns:

NumPy array of the baseline trajectory over the specified

fevals_range.The real_stopping_point_index and the real, fictional curve, errors over the specified

fevals_range.

- Return type:

Two possible returns, for

BaselineandCurverespectively

- get_curve_over_time(time_range: ndarray, dist: ndarray = None, confidence_level: float = None, return_split: bool = True, use_bagging=True)[source]

Get the curve over time.

- Parameters:

time_range – the range of time.

dist – the distribution, used for looking up indices. Ignored in

Baseline. Defaults to None.confidence_level – confidence level for the confidence interval. Ignored in

Baseline. Defaults to None.return_split – whether to return the arrays split at the real / fictional point. Defaults to True.

- Returns:

NumPy array of the baseline trajectory over the specified

time_range.The real_stopping_point_index and the real, fictional curve, errors over the specified

time_range.

- Return type:

Two possible returns, for

BaselineandCurverespectively

- get_split_times(range: ndarray, x_type: str, searchspace_stats: SearchspaceStatistics) ndarray[source]

Get the times at each point in range split into objective_time_keys.

- Parameters:

range – the range of time or function evaluations.

x_type – the type of range (either time or function evaluations).

searchspace_stats – Searchspace statistics object.

- Raises:

ValueError – on wrong

x_type.- Returns:

A NumPy array of size (len(objective_time_keys), len(range)).

- get_split_times_at_feval(fevals_range: ndarray, searchspace_stats: SearchspaceStatistics) ndarray[source]

Get the times at each function eval in the range split into objective_time_keys.

- Parameters:

fevals_range – the range of function evaluations.

searchspace_stats – Searchspace statistics object.

- Returns:

A NumPy array of size (len(objective_time_keys), len(range)).

- get_split_times_at_time(time_range: ndarray, searchspace_stats: SearchspaceStatistics) ndarray[source]

Get the times at each time point in the range split into objective_time_keys.

- Parameters:

time_range – the range of time.

searchspace_stats – Searchspace statistics object.

- Returns:

A NumPy array of size (len(objective_time_keys), len(range)).

- get_indices_in_array(values: ndarray, array: ndarray) ndarray[source]

Function to get the indices in an array in an efficient manner.

For each value, get the index (position) in the 1D array. More general version of

get_indices_in_distribution(), first sorts array and reverses the sort on the result.- Parameters:

values – the values to get the indices of.

array – the array to look up the indices in.

- Returns:

A NumPy integer array with the same shape as

values, containing the indices.

- get_indices_in_distribution(draws: ndarray, dist: ndarray, sorter=None, skip_draws_check: bool = False, skip_dist_check: bool = False) ndarray[source]

Function to get the indices in a distribution in an efficient manner.

For each draw, get the index (position) in the ascendingly sorted distribution. For unsorted dists, use get_indices_in_array().

- Parameters:

draws – the values to get the indices of.

dist – the distribution, assumed to be ascendingly sorted unless sorter is provided.

sorter – NumPy array of indices that sort the distribution. Defaults to None.

skip_draws_check – skips checking that each value in draws is in the dist. Defaults to False.

skip_dist_check – skips checking that the distribution is correctly ordered. Defaults to False.

- Returns:

A NumPy array of type float of the same shape as draws, with NaN where not found in dist.

- moving_average(y: ndarray, window_size=3) ndarray[source]

Function to calculate the moving average over an array.

Output array is the same size as input array. After https://stackoverflow.com/a/47490020.

- Parameters:

y – the 1-dimensional array to calculate the moving average over.

window_size – the moving average window size. Defaults to 3.

- Returns:

the smoothed 1-dimensional array with the same size as input array.

Experiments module

Main experiments code.

- calculate_budget(group: dict, statistics_settings: dict, searchspace_stats: SearchspaceStatistics) dict[source]

Calculates the budget for the experimental group, given cutoff point provided in experiments setup file.

- Parameters:

group – a dictionary with settings for experimental group

statistics_settings – a dictionary with settings related to statistics

searchspace_stats – a SearchspaceStatistics instance with cutoff points determined from related full search space files

- Returns:

A modified group dictionary.

- execute_experiment(filepath: str, profiling: bool = False, full_validate_on_load: bool = True)[source]

Executes the experiment by retrieving it from the cache or running it.

- Parameters:

filepath – path to the experiments .json file.

profiling – whether profiling is enabled. Defaults to False.

full_validate_on_load – whether to fully validate the searchspace statistics file on load. Defaults to True.

- Raises:

FileNotFoundError – if the path to the kernel specified in the experiments file is not found.

- Returns:

A tuple of the experiment dictionary, the experimental groups executed, the dictionary of

Searchspace statisticsand the resulting list ofResultsDescription.

- generate_all_experimental_groups(search_strategies: list[dict], experimental_groups_defaults: dict, parent_folder_path: Path) list[dict][source]

Generates all experimental groups for the experiment as a combination of given applications, gpus and search strategies from experiments setup file.

- Parameters:

search_strategies – list of dictionaries with settings for various search strategies from experiments setup file, section search_strategies.

experimental_groups_defaults – a dictionary with default settings for experimental groups from experiments setup file, section experimental_groups_defaults.

parent_folder_path – path to experiment parent folder that stores all files generated in the experiment.

- Returns:

A list of dictionaries, one for each experimental group.

- generate_experiment_file(name: str, parent_folder: Path, search_strategies: list[dict], applications: list[dict] = None, gpus: list[str] = None, override: dict = None, generate_unique_file=False, overwrite_existing_file=False)[source]

Creates an experiment file based on the given inputs and opinionated defaults.

- generate_input_file(group: dict)[source]

Creates a input json file specific for a given application, gpu and search method.

- Parameters:

group – dictionary with settings for a given experimental group.

- get_args_from_cli(args=None) str[source]

Set the Command Line Interface arguments definitions, get and return the argument values.

- Parameters:

args – optional list of arguments for testing without CLI interaction. Defaults to None.

- Raises:

ValueError – on invalid argument.

- Returns:

The filepath to the experiments file.

- get_experiment(filename: str) dict[source]

Validates and gets the experiment from the experiments .json file.

- Parameters:

filename – path to the experiments .json file.

- Returns:

Experiment dictionary object.

- get_experimental_groups(experiment: dict) list[dict][source]

Prepares all the experimental groups as all combinations of application and gpus (from experimental_groups_defaults) and big experimental groups from setup file (experimental_groups, usually search methods). Check additional settings for each experimental group. Prepares the directory structure for the whole experiment.

- Parameters:

experiment – the experiment dictionary object.

- Returns:

The experimental groups in the experiment dictionary object.

- get_full_search_space_filename_from_input_file(input_filename: Path) Path[source]

Returns a path to full search space file that is provided in the input json file in KernelSpecification.SimulationInput.

- Parameters:

input_filename – path to input json file.

- Raises:

KeyError – if the path is not provided, but is expected.

- Returns:

A path to full search space file that was written in the input json file.

- get_full_search_space_filename_from_pattern(pattern: dict, gpu: str, application_name: str) Path[source]

Returns a path to full search space file that is generated from the pattern provided in experiments setup file.

- Parameters:

pattern – pattern regex string

gpu – name of the gpu, needs to be plugged into the pattern

application_name – name of the application, needs to be plugged into the pattern

- Raises:

NotImplementedError – if the regex expects other variables than just application name and gpu.

- Returns:

A path to full search file generated from the pattern.

Runner module

Interface to run an experiment on the auto-tuning frameworks.

- collect_results(input_file, group: dict, results_description: ResultsDescription, searchspace_stats: SearchspaceStatistics, profiling: bool, compress: bool = True) ResultsDescription[source]

Executes optimization algorithms on tuning problems to capture their behaviour.

- Parameters:

input_file – an input json file to tune.

group – a dictionary with settings for experimental group.

results_description – the

ResultsDescriptionobject to write the results to.searchspace_stats – the

SearchspaceStatisticsobject, used for conversion of imported runs.profiling – whether profiling statistics must be collected.

compress – whether the results should be compressed.

- Returns:

The

ResultsDescriptionobject with the results.

- get_kerneltuner_results_and_metadata(filename_results: str = '/opt/hostedtoolcache/Python/3.12.11/x64/lib/python3.12../last_run/_tune_configuration-results.json', filename_metadata: str = '/opt/hostedtoolcache/Python/3.12.11/x64/lib/python3.12../last_run/_tune_configuration-metadata.json') tuple[list, list][source]

Load the results and metadata files (relative to kernel directory) in accordance with the defined T4 standards.

- Parameters:

filename_results – filepath relative to kernel. Defaults to “../last_run/_tune_configuration-results.json”.

filename_metadata – filepath relative to kernel. Defaults to “../last_run/_tune_configuration-metadata.json”.

- Returns:

A tuple of the results and metadata lists respectively.

- temporary_working_directory_change(new_wd: Path)[source]

Temporarily change to the given working directory in a context. Based on https://stackoverflow.com/questions/75048986/way-to-temporarily-change-the-directory-in-python-to-execute-code-without-affect.

- Parameters:

new_wd – path of the working directory to temporarily change to.

- tune(input_file, application_name: str, device_name: str, group: dict, objective: str, objective_higher_is_better: bool, profiling: bool, searchspace_stats: SearchspaceStatistics) tuple[list, list, int][source]

Tune a program using an optimization algorithm and collect the results.

Optionally collects profiling statistics.

- Parameters:

input_file – the json input file for tuning the application.

application_name – the name of the program to tune.

device_name – the device (GPU) to tune on.

group – the experimental group (usually the search method).

objective – the key to optimize for.

objective_higher_is_better – whether to maximize or minimize the objective.

profiling – whether profiling statistics should be collected.

searchspace_stats – a

SearchspaceStatisticsobject passed to convert imported runs.

- Raises:

ValueError – if tuning fails multiple times in a row.

- Returns:

A tuple of the metadata, the results, and the total runtime in milliseconds.

- write_results(repeated_results: list, results_description: ResultsDescription, compressed=False)[source]

Combine the results and write them to a NumPy file.

- Parameters:

repeated_results – a list of tuning results, one per tuning session.

results_description – the

ResultsDescriptionobject to write the results to.compressed – whether the repeated_results are compressed.

Searchspace statistics module

Code for obtaining search space statistics.

- class SearchspaceStatistics(application_name: str, device_name: str, minimization: bool, objective_time_keys: list[str], objective_performance_keys: list[str], full_search_space_file_path: str, full_validate: bool = True)[source]

Bases:

objectObject for obtaining information from a full search space file.

- T4_time_keys_to_kernel_tuner_time_keys(time_keys: list[str]) list[str][source]

Temporary utility function to use the kernel tuner search space files with the T4 output format.

- Parameters:

time_keys – list of T4-style time keys.

- Returns:

List of Kernel Tuner-style time keys.

- T4_time_keys_to_kernel_tuner_time_keys_mapping = {'compilation': 'compile_time', 'framework': 'framework_time', 'runtimes': 'benchmark_time', 'search_algorithm': 'strategy_time', 'validation': 'verification_time'}

- cutoff_point(cutoff_percentile: float) tuple[float, int][source]

Calculates the cutoff point.

- Parameters:

cutoff_percentile – the desired cutoff percentile to reach before stopping.

- Returns:

A tuple of the objective value at the cutoff point and the fevals to the cutoff point.

- cutoff_point_fevals_time(cutoff_percentile: float) tuple[float, int, float][source]

Calculates the cutoff point.

- Parameters:

cutoff_percentile – the desired cutoff percentile to reach before stopping.

- Returns:

A tuple of the objective value at cutoff point, fevals to cutoff point, and the mean time to cutoff point.

- cutoff_point_fevals_time_start_end(cutoff_percentile_start: float, cutoff_percentile: float) tuple[int, int, float, float][source]

Calculates the cutoff point for both the start and end, and ensures there is enough margin between the two.

- Parameters:

cutoff_percentile_start – the desired cutoff percentile to reach before starting the plot.

cutoff_percentile – the desired cutoff percentile to reach before stopping.

- Returns:

A tuple of the fevals to cutoff point start and end, and the mean time to cutoff point start and end.

- cutoff_point_time_from_fevals(cutoff_point_fevals: int) float[source]

Calculates the time to the cutoff point from the number of function evaluations.

- Parameters:

cutoff_point_fevals – the number of function evaluations to reach the cutoff point.

- Returns:

The time to the cutoff point.

- get_num_duplicate_values(value: float) int[source]

Get the number of duplicate values in the searchspace.

- get_time_per_feval(time_per_feval_operator: str, strategy_offset=False) float[source]

Gets the average time per function evaluation. Several methods available.

- Parameters:

time_per_feval_operator – method to use.

strategy_offset – whether to include a small offset to adjust for missing strategy time.

- Raises:

ValueError – if invalid

time_per_feval_operatoris passed.- Returns:

The average time per function evaluation.

- get_valid_filepath() Path[source]

Returns the filepath to the Searchspace statistics .json file if it exists.

- Raises:

FileNotFoundError – if filepath does not exist.

- Returns:

Filepath to the Searchspace statistics .json file.

- kernel_tuner_time_keys_to_T4_time_keys_mapping = {'benchmark_time': 'runtimes', 'compile_time': 'compilation', 'framework_time': 'framework', 'strategy_time': 'search_algorithm', 'verification_time': 'validation'}

- mean_strategy_time_per_feval() float[source]

Gets the average time spent on the strategy per function evaluation.

- objective_performance_at_cutoff_point(cutoff_percentile: float) float[source]

Calculate the objective performance value at which to stop for a given cutoff percentile.

- Parameters:

cutoff_percentile – the desired cutoff percentile to reach before stopping.

- Returns:

The objective performance value at which to stop.

- objective_performances: dict

- objective_performances_array: ndarray

- objective_performances_total: ndarray

- objective_performances_total_sorted: ndarray

- objective_performances_total_sorted_nan: ndarray

- objective_times: dict

- objective_times_array: ndarray

- objective_times_total: ndarray

- objective_times_total_sorted: ndarray

- plot_histogram(cutoff_percentile: float)[source]

Plots a histogram of the distribution.

- Parameters:

cutoff_percentile – the desired cutoff percentile to reach before stopping.

- repeats: int

- size: int

- total_performance_absolute_optimum() float[source]

Calculate the absolute optimum of the total performances.

- Returns:

Absolute optimum of the total performances.

- total_performance_interquartile_range() float[source]

Get the interquartile range of total performance.

- total_performance_quartiles() tuple[float, float][source]

Get the quartiles (25th and 75th percentiles) of total performance.

- total_time_mean_per_feval() float[source]

Get the true mean per function evaluation by adding the chance of an invalid.

- total_time_median_per_feval() float[source]

Get the true median per function evaluation by adding the chance of an invalid.

- convert_from_time_unit(value, from_unit: str)[source]

Convert the value or list of values from the specified time unit to seconds.

- filter_invalids(values, performance: bool) list[source]

Filter out invalid values from the array.

Assumes that values is a list or array of values. If changes are made here, also change is_invalid_objective_time.

- is_not_invalid_value(value, performance: bool) bool[source]

Checks if a performance or time value is an array or is not invalid.

- nansumwrapper(array: ndarray, **kwargs) ndarray[source]

Wrapper around np.nansum. Ensures partials that contain only NaN are returned as NaN instead of 0.

- Parameters:

array – values to sum.

**kwargs – arbitrary keyword arguments.

- Returns:

Summed NumPy array.

- to_valid_array(results: list[dict], key: str, performance: bool, from_time_unit: str = None, replace_missing_measurement_from_times_key: str = None) ndarray[source]

Convert results performance or time values to a numpy array, sum if the input is a list of arrays.

replace_missing_measurement_from_times_key: if key is missing from measurements, use the mean value from times.

Visualize experiments module

Visualize the results of the experiments.

- class Visualize(experiment_filepath: str, save_figs=True, save_extra_figs=False, continue_after_comparison=False, compare_extra_baselines=False, use_strategy_as_baseline=None)[source]

Bases:

objectClass for visualization of experiments.

- get_head2head_comparison_data(aggregation_data: dict, compare_at_relative_time: float, comparison_unit: str) dict[source]

Gets the data for a head-to-head comparison of strategies across all searchspaces.

- get_head2head_comparison_data_searchspace(x_type: str, compare_at_relative_time: float, comparison_unit: str, searchspace_stats: SearchspaceStatistics, strategies_curves: list[Curve], x_axis_range: ndarray) dict[source]

Gets the data for a head-to-head comparison of strategies on a specific searchspace.

- Parameters:

x_type – the type of

x_axis_range.compare_at_relative_time – the relative point in time to compare at, between 0.0 and 1.0.

comparison_unit – the unit to compare with, ‘time’ or ‘objective’.

searchspace_stats – the Searchspace statistics object.

strategies_curves – the strategy curves to draw in the plot.

x_axis_range – the time or function evaluations range to plot on.

- Returns:

A doubly-nested dictionary with strategy names as keys and how much better outer performs relative to inner.

- get_x_axis_label(x_type: str, objective_time_keys: list)[source]

Formatter to get the appropriate x-axis label depending on the x-axis type.

- Parameters:

x_type – the type of a range, either time or function evaluations.

objective_time_keys – the objective time keys used.

- Raises:

ValueError – when an invalid

x_typeis given.- Returns:

The formatted x-axis label.

- plot_baselines_comparison(time_range: ndarray, searchspace_stats: SearchspaceStatistics, objective_time_keys: list, strategies_curves: list[Curve], confidence_level: float, title: str = None, save_fig=False)[source]

Plots a comparison of baselines on a time range.

Optionally also compares against strategies listed in strategies_curves.

- Parameters:

time_range – range of time to plot on.

searchspace_stats – Searchspace statistics object.

objective_time_keys – objective time keys.

strategies_curves – the strategy curves to draw in the plot.

confidence_level – the confidence interval used for the confidence / prediction interval.

title – the title for this plot, if not given, a title is generated. Defaults to None.

save_fig – whether to save the resulting figure to file. Defaults to False.

- plot_split_times_bar_comparison(x_type: str, fevals_or_time_range: ndarray, searchspace_stats: SearchspaceStatistics, objective_time_keys: list[str], title: str = None, strategies_curves: list[Curve] = [], print_table_format=True, print_skip=['validation'], save_fig=False)[source]

Plots a bar chart comparison of the average split times for strategies over the given range.

- Parameters:

x_type – the type of

fevals_or_time_range.fevals_or_time_range – the time or function evaluations range to plot on.

searchspace_stats – the Searchspace statistics object.

objective_time_keys – the objective time keys.

title – the title for this plot, if not given, a title is generated. Defaults to None.

strategies_curves – the strategy curves to draw in the plot. Defaults to list().

print_table_format – print a LaTeX-formatted table. Defaults to True.

print_skip – list of

time_keysto be skipped in the printed table. Defaults to [“verification_time”].save_fig – whether to save the resulting figure to file. Defaults to False.

- plot_split_times_comparison(x_type: str, fevals_or_time_range: ndarray, searchspace_stats: SearchspaceStatistics, objective_time_keys: list, title: str = None, strategies_curves: list[Curve] = [], save_fig=False)[source]

Plots a comparison of split times for strategies and baselines over the given range.

- Parameters:

x_type – the type of

fevals_or_time_range.fevals_or_time_range – the time or function evaluations range to plot on.

searchspace_stats – the Searchspace statistics object.

objective_time_keys – the objective time keys.

title – the title for this plot, if not given, a title is generated. Defaults to None.

strategies_curves – the strategy curves to draw in the plot. Defaults to list().

save_fig – whether to save the resulting figure to file. Defaults to False.

- Raises:

ValueError – on unexpected strategies curve instance.

- plot_strategies(style: str, x_type: str, y_type: str, ax: Axes, searchspace_stats: SearchspaceStatistics, strategies_curves: list[Curve], x_axis_range: ndarray, plot_settings: dict, baseline_curve: Baseline = None, baselines_extra: list[Baseline] = [], plot_errors=True, plot_cutoffs=False)[source]

Plots all optimization strategies for individual search spaces.

- Parameters:

style – the style of plot, either ‘line’ or ‘scatter’.

x_type – the type of

x_axis_range.y_type – the type of plot on the y-axis.

ax – the axis to plot on.

searchspace_stats – the Searchspace statistics object.

strategies_curves – the strategy curves to draw in the plot. Defaults to list().

x_axis_range – the time or function evaluations range to plot on.

plot_settings – dictionary of additional plot settings.

baseline_curve – the

Baselineto be used as a baseline in the plot. Defaults to None.baselines_extra – additional

Baselinecurves to compare against. Defaults to list().plot_errors – whether errors (confidence / prediction intervals) are visualized. Defaults to True.

plot_cutoffs – whether the cutoff points for early stopping algorithms are visualized. Defaults to False.

- plot_strategies_aggregated(ax: Axes, aggregation_data, visualization_settings: dict = {}, plot_settings: dict = {}) float[source]

Plots all optimization strategies combined accross search spaces.

- Parameters:

ax – the axis to plot on.

aggregation_data – the aggregated data from the various searchspaces.

visualization_settings – dictionary of additional visualization settings.

plot_settings – dictionary of additional visualization settings related to this particular plot.

- Returns:

The lowest performance value of the real stopping point for all strategies.

- plot_x_value_types = ['fevals', 'time', 'aggregated']

- plot_y_value_types = ['absolute', 'scatter', 'normalized', 'baseline']

- x_metric_displayname = {'aggregate_time': 'Relative time to cutoff point', 'fevals': 'Number of function evaluations used', 'time_partial_benchmark_time': 'kernel runtime', 'time_partial_compilation': 'compile time', 'time_partial_compile_time': 'compile time', 'time_partial_framework': 'framework time', 'time_partial_framework_time': 'framework time', 'time_partial_runtimes': 'kernel runtime', 'time_partial_search_algorithm': 'strategy time', 'time_partial_strategy_time': 'strategy time', 'time_partial_times': 'kernel runtime', 'time_partial_verification_time': 'verification time', 'time_total': 'Total time in seconds'}

- y_metric_displayname = {'GFLOP/s': 'GFLOP/s', 'aggregate_objective': 'Aggregate best-found objective value relative to baseline', 'aggregate_objective_max': 'Aggregate improvement over random sampling', 'objective_absolute': 'Best-found objective value', 'objective_baseline': 'Best-found objective value\n(relative to baseline)', 'objective_baseline_max': 'Improvement over random sampling', 'objective_normalized': 'Best-found objective value\n(normalized from median to optimum)', 'objective_relative_median': 'Fraction of absolute optimum relative to median', 'objective_scatter': 'Best-found objective value', 'time': 'Best-found kernel time in milliseconds'}

- get_colors(strategies: list[dict]) list[source]

Assign colors using the tab10 colormap, with lighter shades for children.

- get_colors_old(strategies: list[dict], scale_margin_left=0.4, scale_margin_right=0.15) list[source]

Function to get the colors for each of the strategies.